Weimin Zhou

Image Dehazing Enhancement Algorithm Based on Mean Guided Filtering

Abstract: To improve the effect of image restoration and solve the image detail loss, an image dehazing enhancement algorithm based on mean guided filtering is proposed. The superpixel calculation method is used to pre-segment the original foggy image to obtain different sub-regions. The Ncut algorithm is used to segment the original image, and it outputs the segmented image until there is no more region merging in the image. By means of the mean-guided filtering method, the minimum value is selected as the value of the current pixel point in the local small block of the dark image, and the dark primary color image is obtained, and its transmittance is calculated to obtain the image edge detection result. According to the prior law of dark channel, a classic image dehazing enhancement model is established, and the model is combined with a median filter with low computational complexity to denoise the image in real time and maintain the jump of the mutation area to achieve image dehazing enhancement. The experimental results show that the image dehazing and enhancement effect of the proposed algorithm has obvious advantages, can retain a large amount of image detail information, and the values of information entropy, peak signal-to-noise ratio, and structural similarity are high. The research innovatively combines a variety of methods to achieve image dehazing and improve the quality effect. Through segmentation, filtering, denoising and other operations, the image quality is effectively improved, which provides an important reference for the improvement of image processing technology.

Keywords: Image Dehazing, Image Enhancement, Mean-Guided Filtering, Ncut Algorithm, Transmittance

1. Introduction

In harsh environments such as rain, snow, and heavy fog, acquired images suffer from blurring and haze. Fields that rely on visual image technology, such as traffic monitoring and intelligent driving systems, therefore face considerable difficulties, and researchers at home and abroad have studied the problem extensively [1]. Ye et al. [2] proposed an improved dark channel image dehazing method combining image bright area detection and image enhancement. The image enhancement method was used to further optimize the restored image and correct its low brightness. You et al. [3] proposed a polarization image dehazing enhancement algorithm that combines the advantages of polarization imaging with the dark channel prior principle. Image dehazing and enhancement were realized on the basis of the atmospheric physical degradation model. He et al. [4] proposed an image enhancement dehazing algorithm based on guidance coefficient weighting and adaptive image enhancement. The restored image was combined with the atmospheric scattering model, and the adaptive linear contrast enhancement method was used to optimize the restored image. Fu et al. [5] proposed a multi-level feature gradual thinning and edge enhancement dehazing algorithm inspired by ordinary differential equations. The enhanced edges were superimposed on the dehazed image, so that the final dehazing result retained details.

To sum up, common image dehazing techniques do not preserve the jumps at abrupt regions when processing image noise, so the improvement in image quality is insignificant and details are still not prominent. To further improve the effect of image dehazing and enhance the image quality, an image dehazing enhancement algorithm based on mean guided filtering was proposed in this research. The algorithm established an image dehazing enhancement model according to the dark channel prior. At the same time, a median filter with low computational complexity was used to denoise the image in real time while keeping the jumps at abrupt regions, thereby realizing image dehazing enhancement. Compared with the traditional image dehazing methods, keeping the jumps at abrupt regions can further enhance the image processing ability, which has important reference significance for improving visual image technology.

2. Image Preprocessing

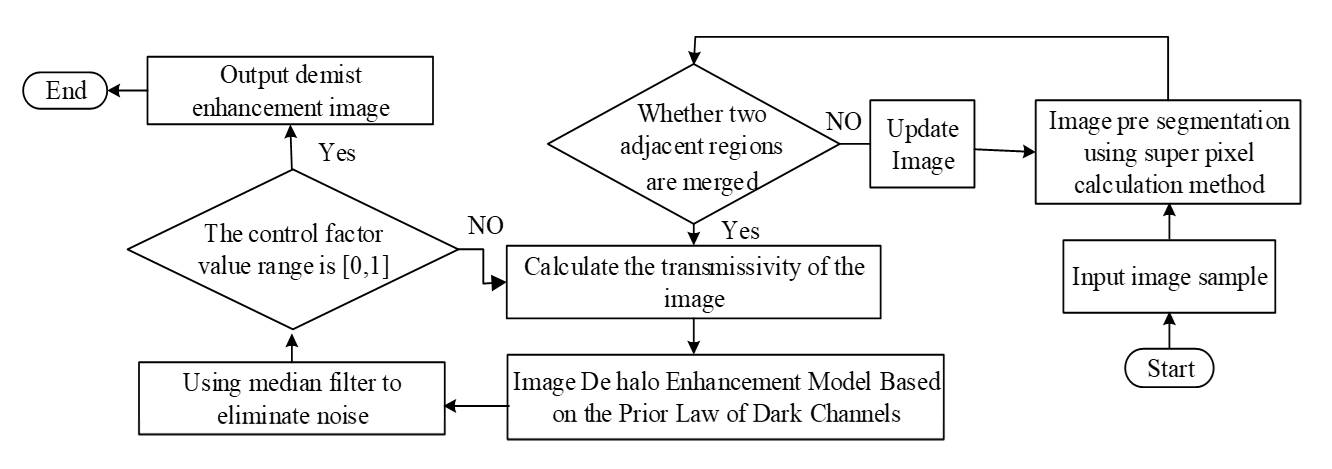

The method flow designed in this paper is shown in Fig. 1.

Step 1 is to input image samples; Step 2 is to pre-segment the image using the superpixel calculation method; Step 3 is to judge whether to merge two adjacent regions [6]; Step 4 is to calculate the transmittance of the image; Step 5 is to establish an image dehazing enhancement model based on the dark channel prior; Step 6 is to judge whether the control factor value range is [0,1]. If yes, it needs to go back to Step 5. Otherwise, it needs to proceed to the next step; Step 7 is to output the enhanced dehazed image and end the process [7].

2.1 Pre-segmentation

Since natural images are easily affected by factors such as illumination, pre-segmentation is performed before image processing. At present, the methods widely used in pre-segmentation are the superpixel calculation method and the watershed segmentation algorithm. Due to the poor robustness of the watershed algorithm, the superpixel calculation method was selected in this paper [8]. The superpixel method is used to divide the image into several sub-regions. Since these sub-regions are composed of adjacent pixels with similar features such as color and texture, they are less susceptible to illumination than individual edges and pixels [9]. This helps to obtain a better image enhancement effect under the influence of external factors.
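The text does not say which superpixel method is used, so the following is only a minimal sketch of the general idea, assuming a simplified SLIC-style clustering on a grayscale image. The function name `slic_like` and the parameters `step`, `iters`, and `compactness` are illustrative assumptions, and the brute-force search over all centers is chosen for brevity rather than speed.

```python
def slic_like(img, step, iters=5, compactness=0.5):
    """Cluster pixels of a grayscale image (list of rows) into superpixels.

    Assumption: simplified SLIC-style k-means on (intensity, row, column);
    the source does not specify the superpixel method.
    """
    h, w = len(img), len(img[0])
    # Seed one cluster center per grid cell: [row, col, intensity].
    centers = [[float(y), float(x), float(img[y][x])]
               for y in range(step // 2, h, step)
               for x in range(step // 2, w, step)]
    labels = [[0] * w for _ in range(h)]
    for _ in range(iters):
        # Assignment: nearest center in combined intensity/spatial distance.
        for y in range(h):
            for x in range(w):
                best_k, best_d = 0, float("inf")
                for k, (cy, cx, cv) in enumerate(centers):
                    d_color = (img[y][x] - cv) ** 2
                    d_space = ((y - cy) ** 2 + (x - cx) ** 2) / step ** 2
                    d = d_color + compactness * d_space
                    if d < best_d:
                        best_k, best_d = k, d
                labels[y][x] = best_k
        # Update: move each center to the mean of its member pixels.
        sums = [[0.0, 0.0, 0.0, 0] for _ in centers]
        for y in range(h):
            for x in range(w):
                s = sums[labels[y][x]]
                s[0] += y
                s[1] += x
                s[2] += img[y][x]
                s[3] += 1
        for k, (sy, sx, sv, n) in enumerate(sums):
            if n:
                centers[k] = [sy / n, sx / n, sv / n]
    return labels


# Toy image: dark left half, bright right half.
toy = [[0.0] * 4 + [1.0] * 4 for _ in range(4)]
toy_labels = slic_like(toy, step=4)
```

On the toy image the two grid seeds settle on the dark and bright halves, which illustrates why pixels inside one sub-region share similar features.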

2.2 Image Segmentation Implementation

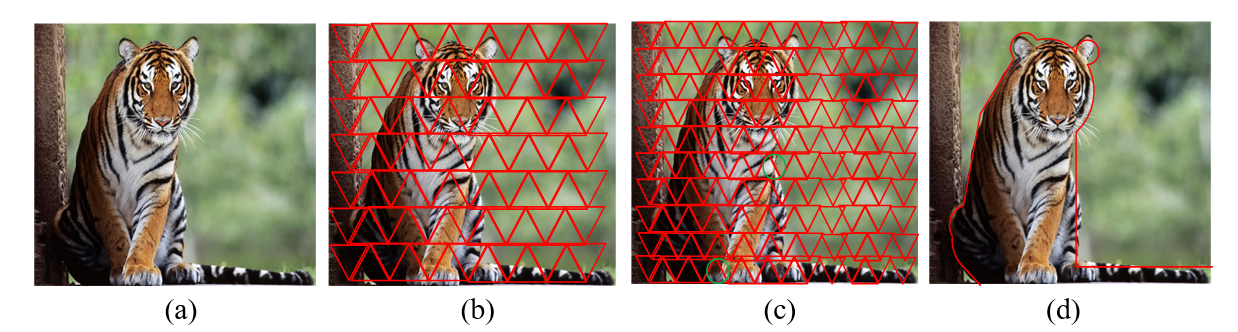

Taking an actual image (Fig. 2) as an example, the specific steps of image segmentation are given.

Fig. 2.

(1) The Ncut algorithm is used to pre-segment the original image to obtain areas $a_1, a_2, \ldots, a_n$, which contain some extremely small areas, as shown in Fig. 1(b) [10].

(2) The minimal areas in the initial segmentation that satisfy formula (1) are deleted, as shown in Fig. 1(c). The differences at some corresponding positions in Fig. 1(b) and 1(c) are marked with green circles.

In formula (1), m and n represent the length and width of the original image, respectively; $N_{a_i}$ represents the number of pixels in area $a_i$; M is the total number of areas obtained by the Ncut algorithm. Since the very small areas in the initial segmentation have little effect on the overall segmentation, deleting them improves the computational efficiency of subsequent steps [11].

(3) The consistency and similarity of different regions are calculated according to formulas (2) and (3) [8]:

In formula (2), $Z\left(a_i\right)$ is the amount of mutual information in the region; $T(x, y)$ denotes the phase consistency of different regions, where x and y indicate the neighborhood and structural information, respectively [12].

(4) Whether two adjacent regions should be merged is determined, and then whether merging should stop is determined.

(5) The image is updated and steps (2)–(4) are repeated until no more regions are merged in the image. The image is then output, as shown in Fig. 1(d).
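The steps above can be sketched roughly as follows. Because formulas (1) to (3) are not reproduced here, the sketch relies on stand-ins that are assumptions rather than the paper's criteria: a hypothetical area threshold `min_fraction` replaces formula (1), a tiny region is absorbed by its most frequent neighboring label instead of being deleted outright, and the similarity test is a simple difference of mean intensities bounded by a hypothetical tolerance `tol`.

```python
from collections import Counter


def neighbors(y, x, h, w):
    """Yield the 4-connected neighbors of pixel (y, x)."""
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ny, nx = y + dy, x + dx
        if 0 <= ny < h and 0 <= nx < w:
            yield ny, nx


def absorb_small_regions(labels, min_fraction):
    """Step (2): relabel regions smaller than min_fraction of the image.

    Assumption: the threshold stands in for formula (1), and a tiny
    region is absorbed by its most frequent neighboring label.
    """
    h, w = len(labels), len(labels[0])
    sizes = Counter(l for row in labels for l in row)
    out = [row[:] for row in labels]
    for small in [l for l, n in sizes.items() if n < min_fraction * h * w]:
        border = Counter()
        for y in range(h):
            for x in range(w):
                if out[y][x] != small:
                    continue
                for ny, nx in neighbors(y, x, h, w):
                    if out[ny][nx] != small:
                        border[out[ny][nx]] += 1
        if border:
            target = border.most_common(1)[0][0]
            out = [[target if l == small else l for l in row] for row in out]
    return out


def merge_similar_regions(img, labels, tol):
    """Steps (3)-(5): merge adjacent regions until no pair is similar.

    Assumption: similarity is the absolute difference of mean
    intensities, standing in for formulas (2) and (3).
    """
    h, w = len(labels), len(labels[0])
    out = [row[:] for row in labels]
    while True:
        # Mean intensity of every current region.
        total, count = Counter(), Counter()
        for y in range(h):
            for x in range(w):
                total[out[y][x]] += img[y][x]
                count[out[y][x]] += 1
        mean = {l: total[l] / count[l] for l in count}
        # Look for the first adjacent pair that is similar enough.
        pair = None
        for y in range(h):
            for x in range(w):
                for ny, nx in neighbors(y, x, h, w):
                    a, b = out[y][x], out[ny][nx]
                    if pair is None and a != b and abs(mean[a] - mean[b]) <= tol:
                        pair = (a, b)
        if pair is None:
            return out  # no more regions to merge: stop
        keep, drop = pair
        out = [[keep if l == drop else l for l in row] for row in out]


# Toy example: label 3 is a one-pixel region; regions 0 and 1 look alike.
img = [[0.0, 0.0, 0.1, 0.1, 1.0, 1.0] for _ in range(2)]
seg = [[0, 0, 1, 1, 2, 3], [0, 0, 1, 1, 2, 2]]
cleaned = absorb_small_regions(seg, min_fraction=0.1)
merged = merge_similar_regions(img, cleaned, tol=0.2)
```

The loop in `merge_similar_regions` mirrors step (5): it recomputes the region statistics after each merge and stops only when no adjacent pair passes the similarity test.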

3. Image Dehazing Enhancement Processing

Based on the image segmentation results, the transmittance of the image that needs to be dehazed is further solved, and the image edge detection results are obtained through the mean-guided filtering method. A linear model is established according to the detection results to achieve image dehazing enhancement.

3.1 Transmittance Solution

First, the minimum value over the three RGB channels at each pixel of the image is selected to obtain the dark image. Then the minimum value within a local small block of the dark image is taken as the value of the current pixel, which yields the dark primary color image [13]. The expression is as follows:

(4)

$$I_a(s)=\min _{e \in\{G, R, B\}}\left\{\min _{a \in M}\left[\left(f_a+u_a\right)\right]\right\} \times S_{a_i}^n .$$

In formula (4), $f_a$ represents the color channel; $u_a$ denotes the local patch centered on the region a. To solve the restored image by formula (4), it is necessary to obtain the transmittance w(t) and the atmospheric light vector H. The transmittance w(t) is estimated first. Assuming that the atmospheric light vector H is known and that w(t) is constant within the local small block $u_a$, the minimum operation is applied twice to both sides of formula (4) and the result is divided by H to obtain formula (5) [14]:

(5)

$$G_a=1-\min _{a \in\{G, R, B\}}\left\{\min _{a \in M}\left[\frac{g(a)}{H}\right]\right\}.$$

Since w(t) is constant within the local block $u_a$, it can be moved outside the min operator and denoted as $w_i(t)$. Since H is a positive value, formula (4), obtained from the dark primary color prior, is substituted into formula (5) to obtain the following formula (6):

The second term on the right side of formula (6) is the dark channel of the normalized image $d^2(a) / H^2$. Even in an actual clear scene, some haze remains in areas with a far depth of field [15]. Therefore, a parameter r = 0.95 (0 < r < 1) is introduced to reduce the degree of dehazing and make the restored scene more realistic.
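As a rough illustration of the two minimum operations and the role of r, the following sketch assumes the usual dark channel prior estimate, transmittance = 1 - r × (dark channel of the image normalized by H). The function names, the `radius` parameter for the local block, and the per-channel `airlight` tuple standing in for H are assumptions made for this example only.

```python
def dark_channel(img, radius):
    """Dark channel: minimum over color channels, then over a local patch.

    img is a list of rows of (r, g, b) tuples with values in [0, 1].
    """
    h, w = len(img), len(img[0])
    # First minimum: per-pixel minimum over the three color channels.
    channel_min = [[min(px) for px in row] for row in img]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Second minimum: over the local block, clipped at the border.
            block = [channel_min[j][i]
                     for j in range(max(0, y - radius), min(h, y + radius + 1))
                     for i in range(max(0, x - radius), min(w, x + radius + 1))]
            dark[y][x] = min(block)
    return dark


def transmittance(img, airlight, radius, r=0.95):
    """Estimate transmittance as 1 - r * dark_channel(img / airlight)."""
    # Normalize every channel by the (assumed known) atmospheric light.
    normalized = [[tuple(c / a for c, a in zip(px, airlight)) for px in row]
                  for row in img]
    return [[1.0 - r * d for d in row]
            for row in dark_channel(normalized, radius)]


# Toy 2x2 image: three bright hazy pixels and one darker pixel.
hazy = [[(0.8, 0.9, 1.0), (0.8, 0.9, 1.0)],
        [(0.8, 0.9, 1.0), (0.2, 0.5, 0.6)]]
t_point = transmittance(hazy, (1.0, 1.0, 1.0), radius=0)
t_block = transmittance(hazy, (1.0, 1.0, 1.0), radius=1)
```

With `radius=0` each pixel keeps its own estimate, while `radius=1` lets the single dark pixel dominate the whole block. Because r < 1, the estimated transmittance never drops to zero, so a small amount of haze is deliberately kept.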

The transmittance [TeX:] $$w_i(t)$$ is rewritten as:

In formula (7), $L_i$ and $L_j$ both denote the sparse features of hazy images.

3.2 Implementation of Image Dehazing Enhancement

According to the dark channel prior law, each local block of most outdoor haze-free images must have at least one pixel with a very low intensity value of the color channel. The following classic image dehazing enhancement model can be established:

(8)

$$I(t)=O(t) C(t)+\Phi(1-C(t)).$$

In formula (8), I(t) is the image with fog; O(t) denotes the image without fog; $\Phi$ indicates the atmospheric light value of the entire image; C(t) is the medium transmittance; O(t)C(t) expresses the attenuation of the light of the object during propagation; $\Phi(1-C(t))$ refers to the atmospheric light component, that is, the fog concentration. This model removes the spatially isotropic fog by estimating the fog concentration and subtracting the fog component from the foggy image [16]. The algorithm in this paper is based on the above classic image dehazing model [17]. Since I(t) is known, the task is to solve for the haze-free image O(t) under ideal lighting conditions. To simplify the calculation and control the number of parameters, the atmospheric light component is recorded as formula (9):

Transforming formulas (8) and (9) yields formula (10):

To protect the edges of the image and remove the noise in the transmittance map, the transmittance map must be smoothed [18]. After comparing the advantages and disadvantages of several filters, this paper used the median filter, which has lower computational complexity, to remove noise while keeping the jumps in the abrupt regions [19]. After estimating the global atmospheric light $\Psi(t)$ and fog concentration using the dark channel principle, the image O(t) is recovered using the physical model formed by the fog image:

The specific implementation steps of image dehazing enhancement are as follows:

(1) The atmospheric light value $\Psi(t)=\Phi(1-C(t))$, called the fog concentration, is defined and then estimated.

(2) The dark channel is calculated and median filtering on the dark channel is performed.

(3) Foggy and fog-free areas are distinguished by local standard deviation.

(4) It controls the degree of image dehazing.

(5) Since not all parts of the image have fog, it is necessary to treat the regions with good contrast differently. To smooth the image while retaining the boundary part in the image, step (4) is used to shield the regions that do not need dehazing, making the results more robust [20].

(6) A control factor is introduced, whose value range is [0, 1]. As the value increases, the dehazing ability becomes stronger. This factor can effectively control the dehazing effect and avoid a final result in which the dehazing is too weak to meet requirements or so strong that it looks unnatural.
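The steps above can be sketched for a grayscale image as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: the fog component is approximated by the median-filtered dark channel scaled by the control factor (here called `strength`), pixels are treated as foggy when their local standard deviation is below a hypothetical threshold `std_thresh`, and a hypothetical lower bound `t_min` keeps the division stable. The recovery inverts the model in which the foggy image equals O(t)C(t) plus the fog component $\Phi(1-C(t))$.

```python
from statistics import median, pstdev


def patch(img, y, x, radius):
    """Values in the square patch around (y, x), clipped at the borders."""
    h, w = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(0, y - radius), min(h, y + radius + 1))
            for i in range(max(0, x - radius), min(w, x + radius + 1))]


def median_filter(img, radius=1):
    """Step (2): median filtering removes outliers but keeps jumps."""
    return [[median(patch(img, y, x, radius)) for x in range(len(img[0]))]
            for y in range(len(img))]


def fog_mask(img, radius=1, std_thresh=0.1):
    """Step (3): low local standard deviation is taken to mean fog."""
    return [[pstdev(patch(img, y, x, radius)) < std_thresh
             for x in range(len(img[0]))] for y in range(len(img))]


def dehaze(img, airlight, strength, radius=1, std_thresh=0.1, t_min=0.1):
    """Steps (4)-(6) for a grayscale image with values in [0, 1]."""
    # For a single channel, the dark channel is just the local minimum.
    dark = [[min(patch(img, y, x, radius)) for x in range(len(img[0]))]
            for y in range(len(img))]
    dark = median_filter(dark, radius)
    mask = fog_mask(img, radius, std_thresh)
    out = []
    for y, row in enumerate(img):
        out_row = []
        for x, value in enumerate(row):
            if not mask[y][x]:
                out_row.append(value)  # good contrast: left untouched
                continue
            # The control factor in [0, 1] scales the estimated fog.
            fog = strength * dark[y][x]
            t = max(1.0 - fog / airlight, t_min)
            restored = (value - fog) / t
            out_row.append(min(max(restored, 0.0), 1.0))
        out.append(out_row)
    return out


flat = [[0.6] * 3 for _ in range(3)]            # uniformly hazy patch
checker = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
spike = [[0.5, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 0.5]]
```

With `strength=0.0` the image is returned unchanged, and larger values remove more of the estimated fog, which matches the described behavior of the control factor. The high-contrast `checker` image is masked out and therefore passes through untouched.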

4. Experimental Studies

To verify the effectiveness and rationality of the image dehazing enhancement algorithm based on mean guided filtering, different types of images with rich detail information and depth of field information were selected for testing.

4.1 Experimental Environment and Dataset

This experiment was implemented using PyTorch. The full-reference evaluation index algorithm was implemented using Python, and the non-reference evaluation index algorithm was implemented using MATLAB. The specific experimental environment is shown in Table 1.

The RESIDE dataset and the 2018 NTIRE-specified dataset were divided into training and test sets as needed. The details of the experimental data are shown in Table 2.

Table 1.

| Index | Operating System | CPU | PyTorch version | Python version | MATLAB version | RAM |
|---|---|---|---|---|---|---|
| Parameter | Linux Mint 20 | 2.40 GHz | 1.2.0 | 3.6.10 | MATLAB R2020a | 8 GB |

Table 2.

| Category | Name | Number of foggy images | Number of fog-free images |
|---|---|---|---|
| Training set | ITS | 8,563 | 2,610 |
| | I-HAZE | 470 | 130 |
| Test set | O-HAZE | 1,249 | 307 |
| | SOTS | 109 | 23 |
| | HSTS | 567 | 89 |
| | RTTS | 95 | 10 |

Under the setting of the above experimental environment and dataset, the experimental research was carried out. The improved dark channel image dehazing method [2], the polarization image dehazing enhancement algorithm [3], the image enhancement dehazing algorithm [4] and the multi-level feature gradual thinning and edge enhancement dehazing algorithm [5] were compared with the proposed algorithm. The comparison results are analyzed as follows.

4.2 Analysis of Experimental Results

A foggy image was arbitrarily selected in the dataset, and the dehazing enhancement algorithm for polarized images based on the dark channel prior principle, the image enhancement dehazing algorithm based on weighted and adaptive guidance coefficients and the proposed algorithm were used for dehazing enhancement processing. The results are shown in Fig. 3.

According to Fig. 3, the visual effect of the proposed algorithm was better, which could not only effectively achieve the effect of dehazing, but also highlighted the details of the scene in bright places, and maintained the details of the scene in the deeper colors. The visual effect of the image was obviously better than that obtained by the traditional algorithm, the color fidelity was high, and the structural information was clear.

Fig. 3.

To further measure the image quality, this study used information entropy, peak signal-to-noise ratio (PSNR), structural similarity and other evaluation indicators. The calculation formula is as follows.

In formula (12), $P\left(\rho_i\right)$ is the probability of $\rho_i$; $L_i$ means the number of gray levels of the image. The larger the entropy value of the image, the greater the amount of information, and the richer the detailed information of the image.

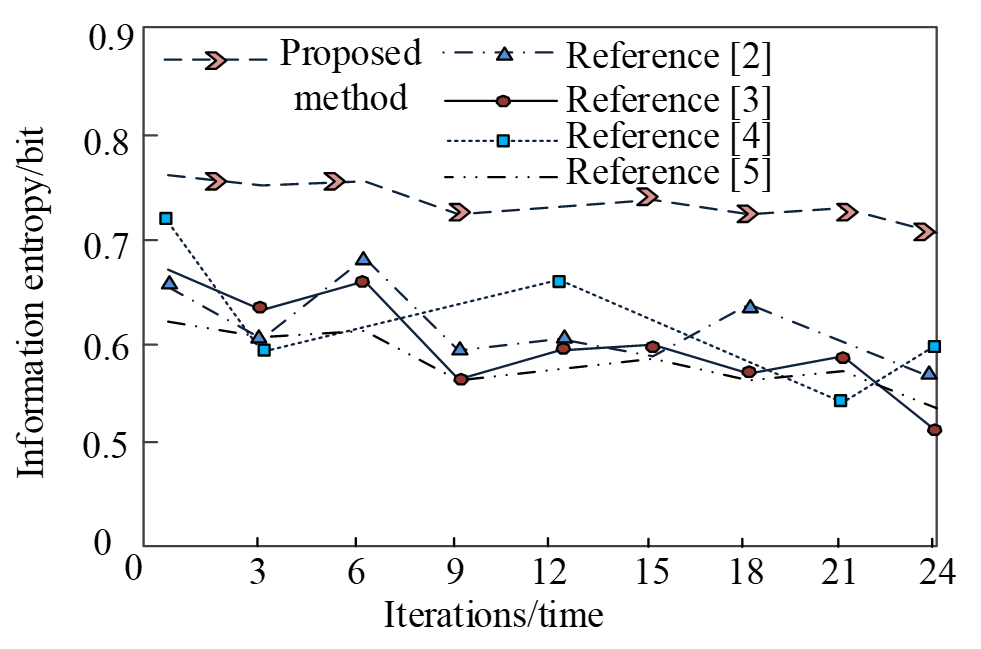

The information entropy after image processing by applying five methods is shown in Fig. 4.
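For reference, the entropy of a grayscale image can be computed as in the sketch below. It assumes the standard Shannon definition, the negative sum of $P(\rho_i) \log_2 P(\rho_i)$ over the gray levels present in the image; the function name `information_entropy` is illustrative.

```python
from collections import Counter
from math import log2


def information_entropy(gray):
    """Shannon entropy, in bits, of the gray-level histogram of an image."""
    pixels = [v for row in gray for v in row]
    counts = Counter(pixels)
    # P(rho_i) is the relative frequency of gray level rho_i.
    return -sum((n / len(pixels)) * log2(n / len(pixels))
                for n in counts.values())


constant = [[7, 7], [7, 7]]       # one gray level: no information
two_level = [[0, 0], [255, 255]]  # two equally likely levels: 1 bit
four_level = [[0, 1], [2, 3]]     # four equally likely levels: 2 bits
```

The three toy images show how the value grows as the gray levels become more varied, which is the sense in which a larger entropy indicates richer detail.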

PSNR is the most widely used evaluation index in the field of image processing. The greater its value, the smaller the degree of deterioration of the processed image and the less distortion compared with the original image. The PSNR is calculated as follows:

In formula (13), $\varsigma$ expresses the number of sampling bits, which is usually set to 8.

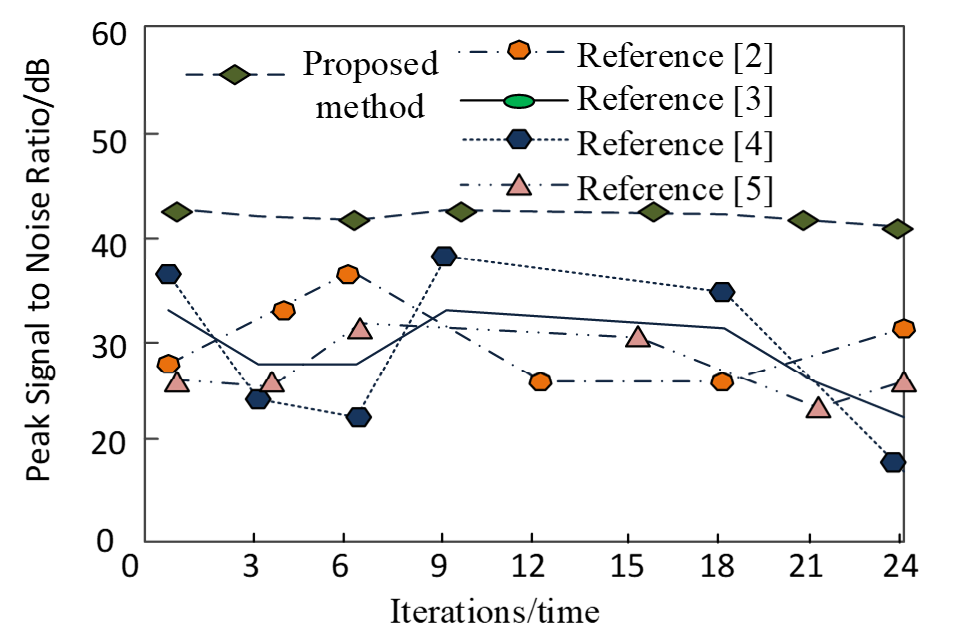

The PSNR after applying five methods is shown in Fig. 5.
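A minimal sketch of the PSNR computation is given below, assuming the common definition $10 \log_{10}\left(\left(2^{\varsigma}-1\right)^2 / \mathrm{MSE}\right)$, where MSE is the mean squared error between the reference and processed images. The function name `psnr` and the `bits` parameter (standing for $\varsigma$) are illustrative.

```python
from math import log10


def psnr(reference, processed, bits=8):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    peak = 2 ** bits - 1  # 255 for 8-bit images
    squared_errors = [(a - b) ** 2
                      for row_a, row_b in zip(reference, processed)
                      for a, b in zip(row_a, row_b)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return float("inf")  # identical images: no distortion
    return 10 * log10(peak ** 2 / mse)


reference = [[10, 20], [30, 40]]
off_by_one = [[11, 19], [31, 39]]  # every pixel differs by 1, so MSE = 1
```

For the toy pair above the MSE is 1, so the result is $10 \log_{10}(255^2)$, roughly 48.13 dB.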

Structural similarity is used to evaluate the ability of an algorithm to preserve structural information, and the higher the value, the better. Given two images $I_1$ and $I_2$, the structural similarity calculation formula is as follows:

(14)

$$\operatorname{SSIM}\left(I_1, I_2\right)=\frac{\left(\varphi_{I_1} \varphi_{I_2}+\tau_1\right)\left(\pi_{I_1 I_2}+\tau_2\right)}{\left(\varphi_{I_1}^2+\varphi_{I_2}^2+\tau_1\right)\left(\pi_{I_1}^2+\pi_{I_2}^2+\tau_2\right)}.$$

In formula (14), $\varphi_{I_1}$ and $\varphi_{I_2}$ represent the averages of $I_1$ and $I_2$, respectively; $\pi_{I_1}^2$ and $\pi_{I_2}^2$ stand for the variances of $I_1$ and $I_2$, respectively; $\pi_{I_1 I_2}$ indicates the covariance of $I_1$ and $I_2$; $\tau_1$ and $\tau_2$ both indicate constants.

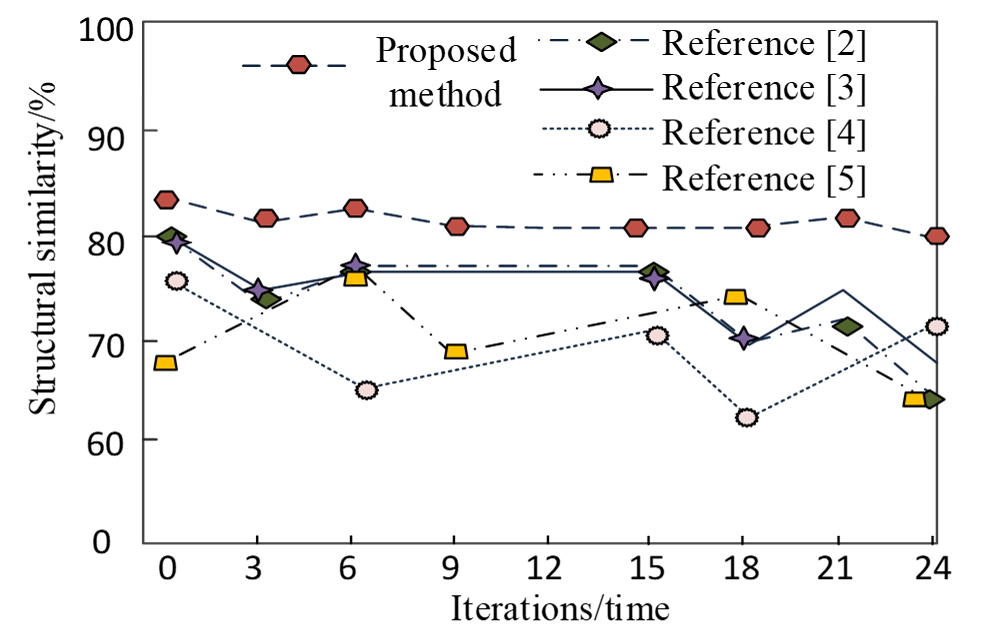

The structural similarity after applying five methods is shown in Fig. 6.
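The sketch below computes a structural similarity value from the global mean, variance, and covariance of two grayscale images. Two points are assumptions rather than the paper's procedure: it follows the widely used definition with a factor of 2 on the mean product and on the covariance in the numerator, which formula (14) as printed does not show, and it uses the conventional constants $(0.01 \cdot \text{peak})^2$ and $(0.03 \cdot \text{peak})^2$ for $\tau_1$ and $\tau_2$. Practical implementations usually average the value over sliding windows instead of using one global window.

```python
def ssim_global(img1, img2, peak=255.0):
    """Single-window SSIM between two equally sized grayscale images."""
    x = [v for row in img1 for v in row]
    y = [v for row in img2 for v in row]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    # Conventional stabilizing constants (assumed values for tau_1, tau_2).
    tau_1, tau_2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    numerator = (2 * mean_x * mean_y + tau_1) * (2 * cov + tau_2)
    denominator = (mean_x ** 2 + mean_y ** 2 + tau_1) * (var_x + var_y + tau_2)
    return numerator / denominator


pattern = [[0, 255], [255, 0]]
inverted = [[255, 0], [0, 255]]
```

With the factors of 2 in place, an image compared with itself scores 1, while the inverted pattern yields a negative value because the covariance is negative.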

According to the above objective evaluation results, the information entropy of the proposed algorithm was higher than 0.7 bit, the PSNR was higher than 40 dB, and the structural similarity was higher than 80%. These values were higher than those of the other four traditional algorithms, which shows that the proposed algorithm could effectively improve the evaluation indexes, obtain better image color, and improve the effect of image recovery.

5. Conclusion

To improve the image restoration effect and solve the unclear restoration of details, low degree of tone restoration and loss of image details, an image dehazing enhancement algorithm based on mean-guided filtering was proposed. The main research results are as follows:

(1) The proposed algorithm had a better visual effect, high color fidelity, and clear structural information, and its visual effect was obviously better than that obtained by traditional algorithms.

(2) The information entropy, PSNR, and structural similarity of the proposed algorithm were higher than those of the traditional algorithms. For the proposed algorithm, the information entropy was higher than 0.7 bit, the PSNR was higher than 40 dB, and the structural similarity was higher than 80%. Therefore, a better image dehazing effect could be obtained and the image quality could be guaranteed.

(3) Compared with the traditional dehazing algorithms, the proposed algorithm added a median filter to deal with image details. At the same time, to ensure the image quality, it maintained the jump of abrupt regions during noise processing. The final experimental results also showed that the proposed algorithm was superior to other algorithms in image detail processing, dehazing effect, etc.

However, the algorithm studied also has shortcomings. In image dehazing, the image noise caused by environmental changes has not been fully considered. In future work, more complex environmental issues need to be considered to improve the overall performance of the algorithm.

Biography

Weimin Zhou

https://orcid.org/0000-0001-7772-1402

He was born in Taizhou in 1980. He is a doctoral student in control science and engineering at Zhejiang University of Technology and a computer teacher at Taizhou Vocational and Technical College, Zhejiang, China. He received the bachelor's degree in information and computing sciences from Chongqing Three Gorges University in 2003, and the master's degree in electronics and communication engineering from Anhui University in 2011. His main research interests are computer vision and image processing.

References

- 1 V. Monga, Y. Li, and Y. C. Eldar, "Algorithm unrolling: interpretable, efficient deep learning for signal and image processing," IEEE Signal Processing Magazine, vol. 38, no. 4, pp. 18-44, 2021. https://doi.org/10.48550/arXiv.1912.10557

- 2 Z. Ye, R. Jia, L. Ning, J. Fu, and L. Cui, "Image defogging enhancement method based on bright region detection," Missiles and Space Vehicles, vol. 2021, no. 1, pp. 115-120, 2021.

- 3 J. You, P. Liu, X. Rong, B. Li, and T. Xu, "Dehazing and enhancement research of polarized image based on dark channel priori principle," Laser Infrared, vol. 50, no. 4, pp. 493-500, 2020.

- 4 L. He, G. Zhou, B. Yao, X. Zhao, and X. Li, "A haze removal algorithm based on guided coefficient weighted and adaptive image enhancement method," Microelectronics & Computer, vol. 37, no. 9, pp. 73-77+82, 2020. http://www.journalmc.com/en/article/id/70ad60b8-2a3d-4860-87ab-4609da2bcb2f

- 5 Y. Fu, S. Yin, Z. Deng, Y. Wang, and S. Hu, "Multi-level features progressive refinement and edge enhancement network for image dehazing," Optics and Precision Engineering, vol. 30, no. 9, pp. 1091-1100, 2022. https://doi.org/10.1016/j.image.2022.116719

- 6 H. Filali and K. Kalti, "Image segmentation using MRF model optimized by a hybrid ACO-ICM algorithm," Soft Computing, vol. 25, no. 15, pp. 10181-10204, 2021. https://link.springer.com/article/10.1007/s00500-021-05957-1

- 7 X. Zhou, X. Luo, and X. Wang, "Optimization of multi threshold image segmentation using improved state transition algorithm," Computer Simulation, vol. 39, no. 1, pp. 486-493, 2022.

- 8 A. Amelio, "A new axiomatic methodology for the image similarity," Applied Soft Computing, vol. 81, article no. 105474, 2019. https://doi.org/10.1016/j.asoc.2019.04.043

- 9 Z. Jiang, X. Sun, and X. Wang, "Image defogging algorithm based on sky region segmentation and dark channel prior," Journal of Systems Science and Information, vol. 8, no. 5, pp. 476-486, 2020. https://doi.org/10.21078/JSSI-2020-476-11

- 10 Z. Chen, B. Ou, and Q. Tian, "An improved dark channel prior image defogging algorithm based on wavelength compensation," Earth Science Informatics, vol. 12, pp. 501-512, 2019. https://link.springer.com/article/10.1007/s12145-019-00395-y

- 11 Y. Cui, S. Zhi, W. Liu, J. Deng, and J. Ren, "An improved dark channel defogging algorithm based on the HSI colour space," IET Image Processing, vol. 16, no. 3, pp. 823-838, 2022. https://doi.org/10.1049/ipr2.12389

- 12 N. Hassan, S. Ullah, N. Bhatti, H. Mahmood, and M. Zia, "A cascaded approach for image defogging based on physical and enhancement models," Signal, Image and Video Processing, vol. 14, no. 5, pp. 867-875, 2020. https://link.springer.com/article/10.1007/s11760-019-01618-x

- 13 D. Fan, X. Lu, X. Liu, W. Chi, and S. Liu, "An iterative defogging algorithm based on pixel-level atmospheric light map," International Journal of Modelling, Identification and Control, vol. 35, no. 4, pp. 287-297, 2020. https://doi.org/10.1504/IJMIC.2020.114787

- 14 N. Sharma, V. Kumar, and S. K. Singla, "Single image defogging using deep learning techniques: past, present and future," Archives of Computational Methods in Engineering, vol. 28, pp. 4449-4469, 2021. https://doi.org/10.1007/s11831-021-09541-6

- 15 S. He, Z. Chen, F. Wang, and M. Wang, "Integrated image defogging network based on improved atmospheric scattering model and attention feature fusion," Earth Science Informatics, vol. 14, pp. 2037-2048, 2021. https://link.springer.com/article/10.1007/s12145-021-00672-9

- 16 G. Li and C. Wang, "A single image defogging algorithm for sky region recognition based on binary mask," in Proceedings of SPIE 12247: International Conference on Image, Signal Processing, and Pattern Recognition (ISPP 2022). Bellingham, WA: International Society for Optics and Photonics, 2022, pp. 127132. https://doi.org/10.1117/12.2636832

- 17 C. Kong, "Review of two typical image defogging algorithms and related improvements," Frontiers in Science and Engineering, vol. 1, no. 7, pp. 132-134, 2021. https://doi.org/10.29556/FSE.202110_1(7).0020

- 18 N. S. Murthy and S. K. Jainuddin, "An improved dark channel prior based defogging algorithm for video sequences," Journal of Information and Optimization Sciences, vol. 42, no. 1, pp. 29-39, 2021. https://www.tandfonline.com/doi/abs/10.1080/02522667.2019.1643562

- 19 W. Xiao, Z. Tang, C. Yang, W. Liang, and M. Y. Hsieh, "ASM-VoFDehaze: a real-time defogging method of zinc froth image," Connection Science, vol. 34, no. 1, pp. 709-731, 2022. https://doi.org/10.1080/09540091.2022.2038543

- 20 W. Zhu, "Sichuan mountainous environment and urban planning based on image dehazing algorithm," Arabian Journal of Geosciences, vol. 14, article no. 1829, 2021. https://doi.org/10.1007/s12517-021-08125-9